AI Alone Won’t Fix Your Supply Chain

Properly integrating AI into your processes can help identify risks and offer proactive insights, but the final decisions must always remain in human hands.

January 10, 2025


After a few high-profile incidents, SolarWinds and Log4Shell in particular, software supply chain security became a focus across the industry. In the last couple of years, generative AI has become the hot topic. Why not combine them? When you get the combination right, they fit together like peanut butter and chocolate.

As markets are beginning to realize, generative tools are not a cure-all for productivity. Companies are still exploring viable ways to turn these technologies into profit. Simply adding a large language model doesn't necessarily improve a product or process. In fact, it can make things worse: many models are trained on undisclosed data, so there's no way to determine whether the output comes from misinformed, biased, or even maliciously poisoned data. We've written before about the need to secure the supply chain behind AI models.

Accountability & Discipline in AI-Driven Decisions

Even with a fully open AI model, the bigger concern is agency. An IBM document from 1979 (supposedly) said, "A computer can never be held accountable; therefore a computer must never make a management decision." The point isn't being able to punish someone for wrongdoing; it's that every business decision needs someone who is accountable for it. Generative AI models are sometimes cynically called "stochastic parrots" because they produce output with no awareness of its actual meaning.

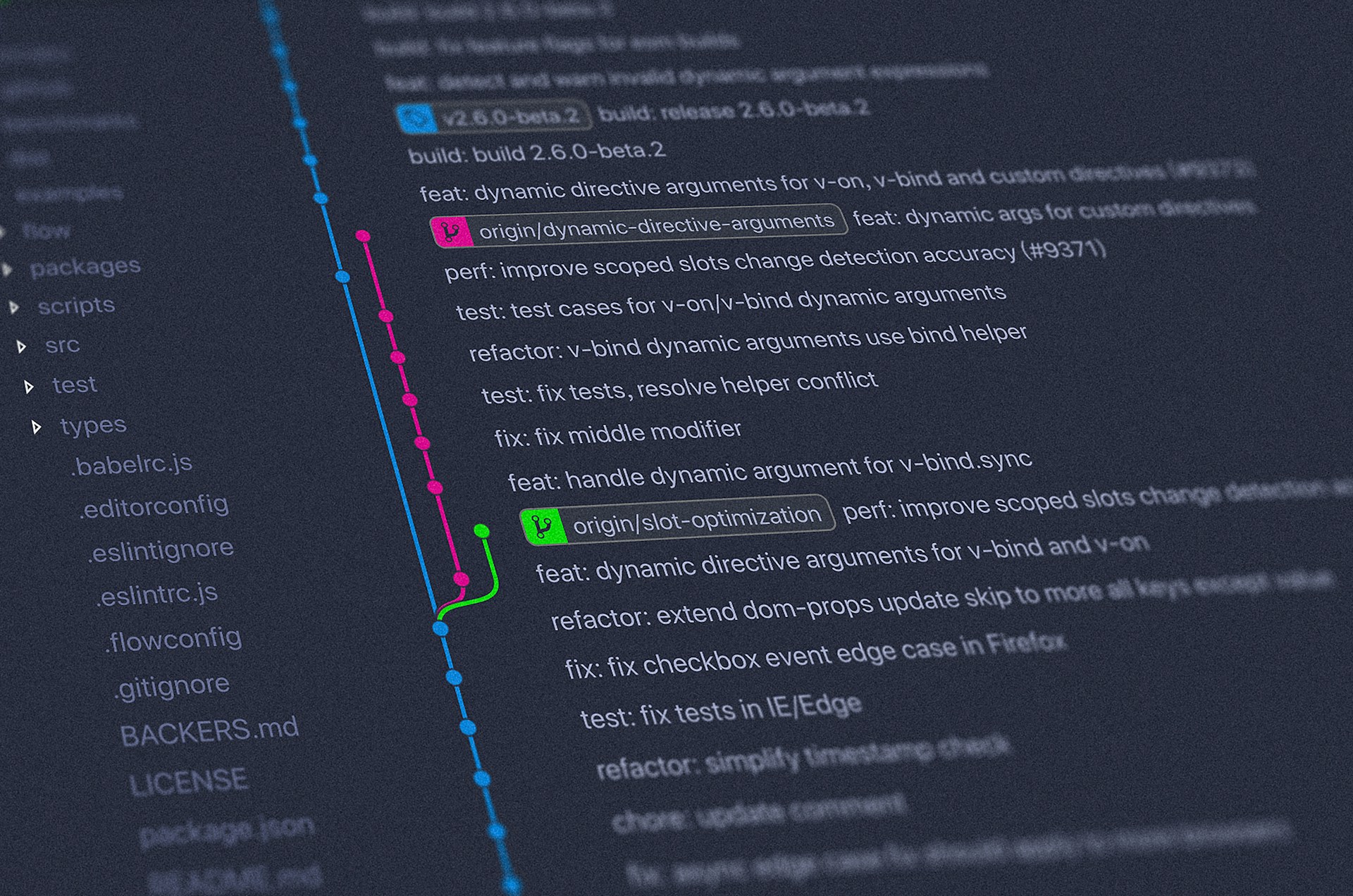

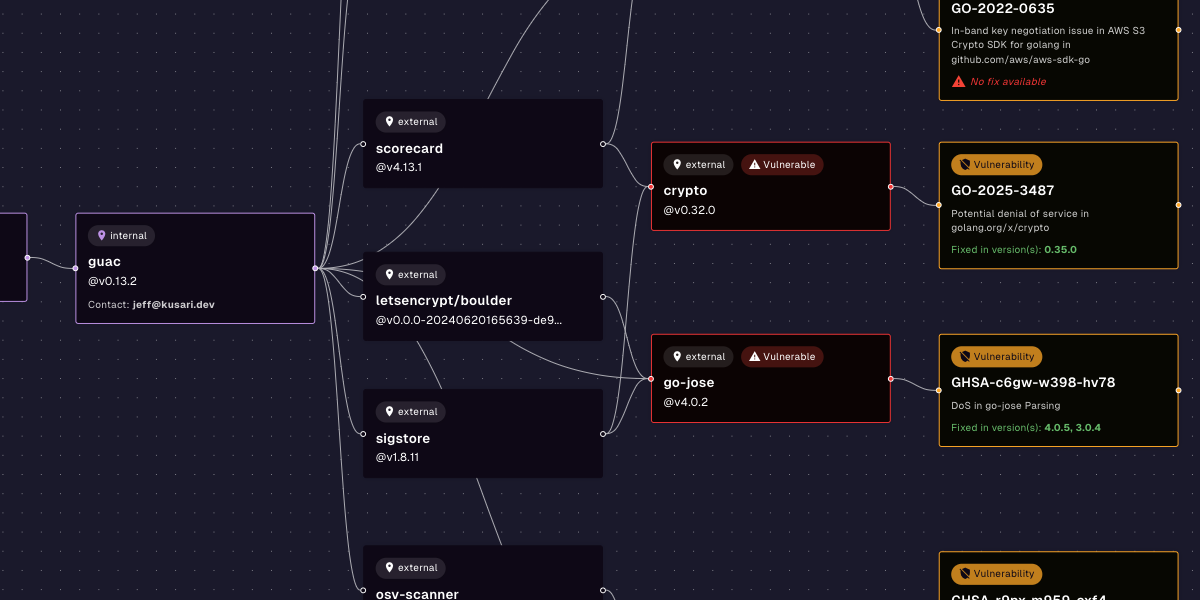

Securing the software supply chain is not a simple, one-time checklist activity. It's an ongoing process of coordinating a variety of efforts: detecting vulnerabilities, remediating them, proactively managing dependencies, and so on. Technical choices have to be evaluated against business criteria, which vary from company to company and industry to industry. Issues that are low priority for a mobile game startup could be show-stoppers in highly regulated industries like banking and healthcare.

You wouldn’t turn over your strategic decisions to an AI model. Hopefully, you wouldn’t turn over your tactical decisions, either. If you let an unsupervised model decide which versions of which libraries to include in your software supply chain, you will end up with unpleasant — and expensive — surprises.

Augment, Don’t Replace: Where AI Shines in Supply Chain Security

"Unsupervised" is the operative word. AI shines when it augments human capabilities rather than replacing them. The human brain is excellent at pattern recognition, but computers can work with much larger data sets, and work faster. They also have more reliable memory. Given some history, an AI model can alert humans to potential issues earlier and suggest likely actions. The computer is not deciding what to do; it is giving the human a head start. Using the DIKW (data, information, knowledge, wisdom) pyramid as an example, the AI model can handle data (facts) and information (relationships between facts) so that human experts get a head start on knowledge and wisdom.

This means there's a place for AI in your supply chain security work, but it's not generative AI from large language models; it's the sort of machine learning that companies have been using for years. If you have a credit card, you may have experienced fraudulent charges. Sometimes you have to report them yourself, but increasingly you find out through a notification from your bank. The bank doesn't automatically close your account; it contacts you to verify the suspect charges. In your supply chain, machine learning models can detect suspicious dependencies, dangerous code patterns, and similar issues, then route them to the appropriate development, operations, or security teams for manual verification and intervention.
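To make the "flag, don't decide" pattern concrete, here is a minimal sketch in Python. It is illustrative only and makes several assumptions. The `Dependency` fields, the weights, the 0.4 threshold, and the team names are all hypothetical. The `score` function is a toy weighted heuristic standing in for a trained model's output. The key design choice is that `triage` only produces findings for humans to review; like the bank's fraud alert, it never blocks, removes, or approves anything on its own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dependency:
    """Hypothetical record of a candidate dependency update."""
    name: str
    version: str
    days_since_release: int      # very new releases carry more uncertainty
    maintainer_changed: bool     # ownership changed since the version we last used
    adds_install_script: bool    # new code that runs at install time
    known_cve_count: int         # advisories matched against this version


@dataclass
class Finding:
    """A flag for a human to verify; it triggers no automatic action."""
    dependency: Dependency
    score: float
    reasons: list[str]
    route_to: str


def score(dep: Dependency) -> tuple[float, list[str]]:
    """Toy weighted score (0.0 to 1.0) standing in for a trained model."""
    total, reasons = 0.0, []
    if dep.known_cve_count > 0:
        total += 0.5
        reasons.append(f"{dep.known_cve_count} known advisories")
    if dep.maintainer_changed:
        total += 0.3
        reasons.append("maintainer changed")
    if dep.adds_install_script:
        total += 0.3
        reasons.append("new install-time script")
    if dep.days_since_release < 7:
        total += 0.1
        reasons.append("released in the last week")
    return min(total, 1.0), reasons


def triage(deps: list[Dependency], threshold: float = 0.4) -> list[Finding]:
    """Flag suspicious dependencies and route them; never block or approve."""
    findings = []
    for dep in deps:
        s, reasons = score(dep)
        if s < threshold:
            continue  # below threshold: nothing to review
        # Assumed routing rule: advisories go to security, behavior changes to developers.
        team = "security" if dep.known_cve_count > 0 else "development"
        findings.append(Finding(dep, s, reasons, team))
    # Highest scores first, so reviewers see the most urgent items early.
    return sorted(findings, key=lambda f: f.score, reverse=True)
```

For example, given three hypothetical packages, where `pkg-a` is old and unchanged, `pkg-b` has a new maintainer and a new install script, and `pkg-c` matches one advisory, `triage` returns only `pkg-b` (routed to development) followed by `pkg-c` (routed to security). What happens next is still a human decision.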

There’s no quick solution to securing your software supply chain, no matter how much AI is involved, but you can use AI in the right places to give yourself a head start.
